Guru's AI Agent Center

The AI Agent Center (AIAC) is your command center for managing Knowledge Agent performance and quality. It's where you review questions and answers, understand which sources are being used, and monitor automated verification decisions—all in one place.

Access Required: Only Owners or relevant custom roles can view data regarding their Knowledge Agent in the AI Agent Center.

All users can view questions they've asked in the Agent Center.

Best Practices: Check out the video below for tips on using the AI Agent Center.

The Questions Tab

The Questions tab shows you every question asked to your Knowledge Agent and the answers it provided. This is your primary tool for understanding how your Knowledge Agent is being used and where it needs improvement.

What you'll see

Each entry in the Questions tab includes:

- The question that was asked (in the last 90 days). Questions can be archived from the Agent Center using the archive button on the right side of the table. The questions you see include:

  - Questions you asked Guru. This includes questions asked using Guru's MCP Server.

  - Questions asked to a Knowledge Agent where you’re assigned as the Knowledge Agent Owner or relevant custom role. This includes questions asked using Guru's MCP Server.

  - Questions referencing Cards you're the verifier of (if no one is assigned).

  - Questions individually assigned to you.

- The answer your Knowledge Agent provided

- Source chips showing which documents were used to generate the answer

- Feedback (thumbs up, thumbs down, or no feedback)

- When it was asked and by whom (if available)

Filtering and searching

Use the filters at the top of the Questions tab to narrow down what you're looking at:

- By Knowledge Agent (if viewing multiple agents)

- By feedback type (flagged, thumbs up, no feedback)

- By date range

- By asker (if available)

- By source used

You can also search for specific keywords to find questions on particular topics.
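As a rough mental model of how these filters combine, the sketch below applies each supplied filter in turn, so an entry must match all of them to be shown. The `QuestionEntry` fields and the `filter_questions` helper are hypothetical names chosen for illustration; they are not Guru's schema or API.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class QuestionEntry:
    # Hypothetical record shape; field names are illustrative only.
    question: str
    agent: str
    feedback: Optional[str]  # "thumbs_up", "flagged", or None for no feedback
    asked_on: date
    asker: Optional[str] = None


def filter_questions(entries, agent=None, feedback="any",
                     start=None, end=None, keyword=None):
    """Return the entries that match every filter that was supplied."""
    results = []
    for entry in entries:
        if agent is not None and entry.agent != agent:
            continue
        # "any" means the feedback filter is off; None selects "no feedback".
        if feedback != "any" and entry.feedback != feedback:
            continue
        if start is not None and entry.asked_on < start:
            continue
        if end is not None and entry.asked_on > end:
            continue
        if keyword is not None and keyword.lower() not in entry.question.lower():
            continue
        results.append(entry)
    return results
```

For example, `filter_questions(entries, feedback="flagged", keyword="password")` would keep only flagged questions that mention "password".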

Viewing conversation threads

When users ask questions in chat, those questions are often part of a larger conversation. Thread Context lets you see the full conversational flow that led to each question, helping you understand the complete context behind user inquiries.

- In the Questions tab, any question that belongs to a chat thread will include a View chat thread button.

- Click this button to open a slideout panel on the right side of the screen that displays the entire conversation in chronological order, with the selected question highlighted.

The thread view is read-only, meaning you can't edit the conversation history. However, you can still interact with feedback elements like thumbs up/down within the thread view.

Reviewing answers and providing feedback

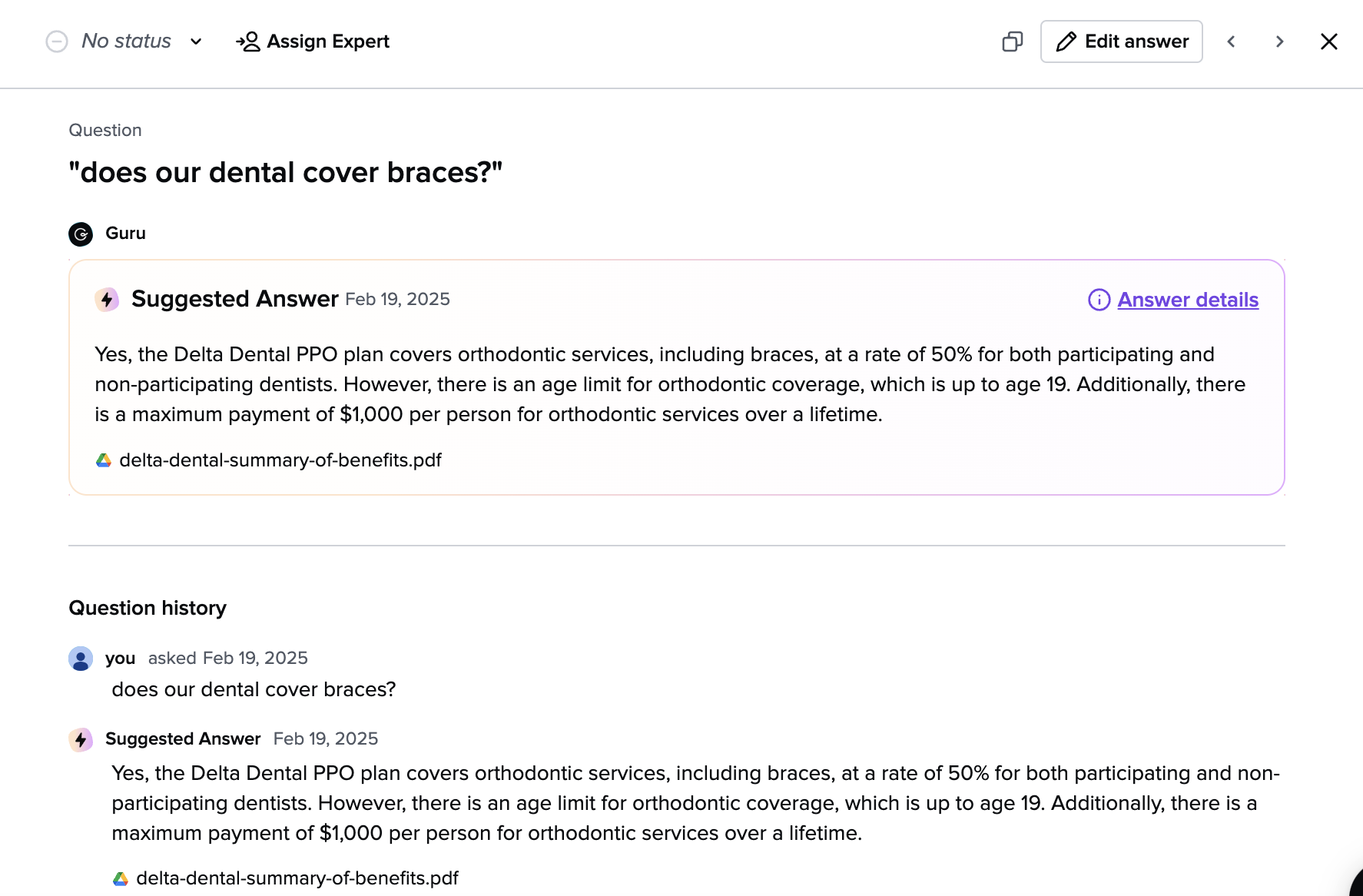

Click into any question to see the full answer and source details. From here, you can:

- Thumbs up the answer if it was helpful and accurate

- Flag the answer if it was incorrect, unhelpful, or used outdated information

- Edit the answer to show your Knowledge Agent a better response

- View the sources used to generate the answer

Your feedback doesn't just fix one answer; it teaches the system to make better decisions at scale. These signals feed directly into the automated verification rules that run daily.

Assigning a question to an Expert

To assign a flagged or incomplete answer for expert review:

- Click the question row to expand the details.

- Click Assign Expert.

- In the dropdown, choose an individual or Group to respond. Guru may suggest experts.

- (Optional) Add a note to provide context to the assigned expert.

- Click Submit. The Expert will be notified via their preferred notification settings. (If you are assigning to an Expert via Slack, they will see it in My Tasks but will not receive a notification.)

Note: If you see a user avatar in the "Sources" column, that user has marked the question as complete.

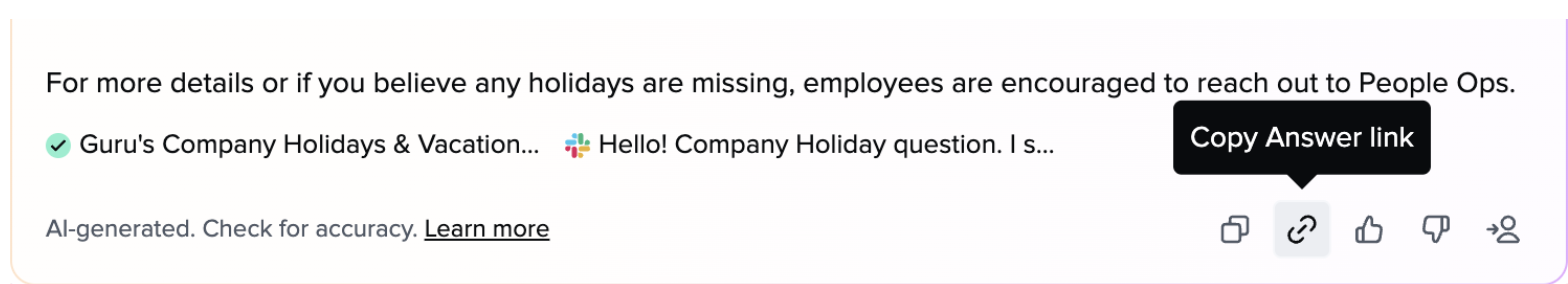

Sharing an Answer with another person

A link to the question is located at the bottom of every AI-generated answer in Guru. You'll find it positioned neatly between "Copy Answer" and the feedback buttons ("Thumbs Up" and "Thumbs Down").

When clicked, it instantly copies a link to the clipboard and shows a confirmation message. The link directs teammates to the question in the AI Agent Center, preserving the correct permissions and eliminating the need to manually dig or copy/paste content.

Editing a suggested Answer

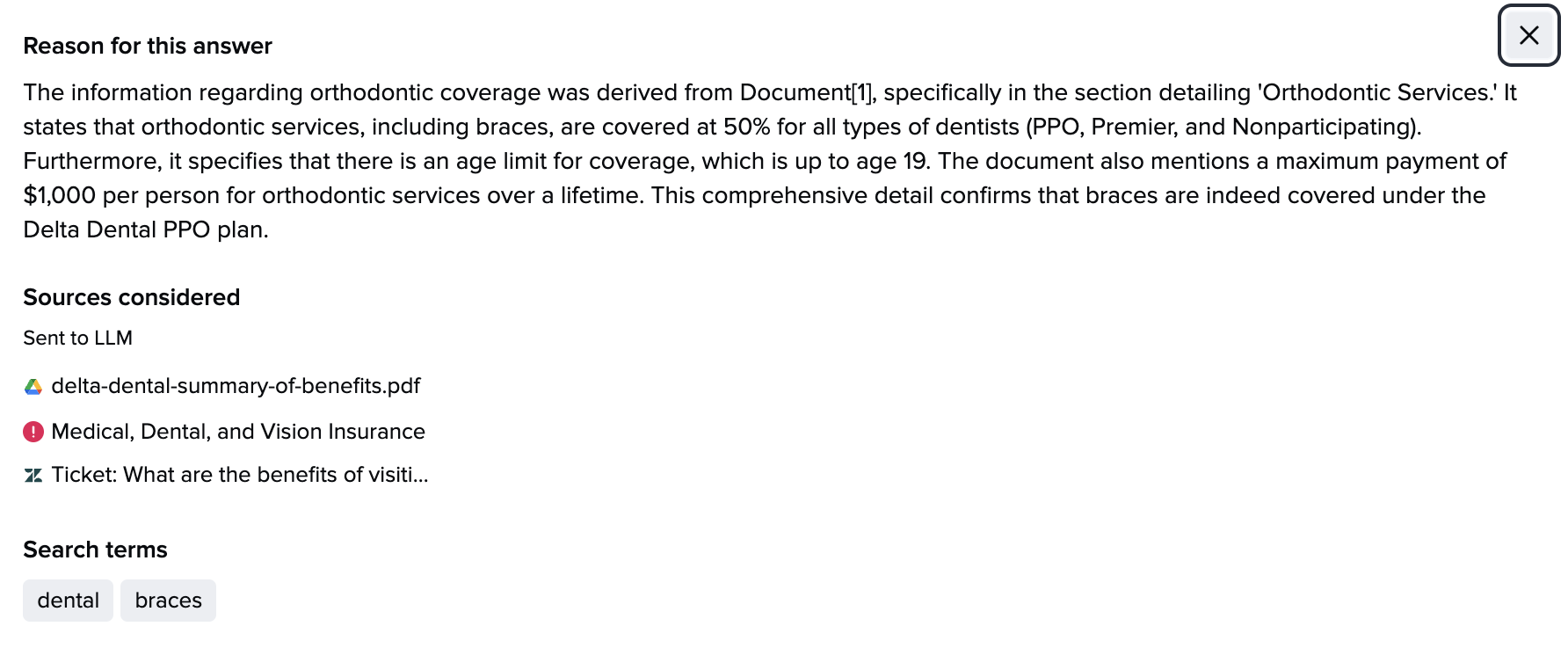

To update an AI-generated answer and ensure more accurate results in the future:

- Click the question row to expand the details.

- Click Answer Details to see the answer reason, sources, and search terms.

- Click Edit answer.

- Under Step 1, select the sources to answer the question. Here, you will see the ability to:

  - Search connected external sources (excluding Slack).

  - Search and apply existing Guru Cards.

  - Edit a source Card.

  - Remove a source.

  - Create a new Guru Card (+ New Card).

- Under Step 2, you will see the regenerated answer. Review it for improved accuracy and select 🔄 refresh if needed.

- Click Save answer to finalize or Cancel to discard changes.

- The question will be marked correct, and the original asker will be notified of the new answer.

Note: If you've added web search functionality to a Knowledge Agent, you cannot use those web pages to train the AI. This is because Guru performs a live web search at the moment the question is asked.

Viewing unverified sources that may be relevant

When your Knowledge Agent is configured to filter sources by verification status (using "Verified only" or "Verified and no verification status" settings), you'll see an additional collapsible section titled "Unverified Sources that may be relevant to this question."

This section surfaces sources that are relevant to the question but were excluded from the AI-generated answer because they haven't been verified yet. The section is collapsed by default to keep the interface clean.

When you expand this section, you can:

- Review unverified sources that could improve the answer

- Open a source to read its full content

- Edit the source content directly (if you have verification permissions)

- Add the source to the answer, which automatically verifies it and triggers answer regeneration

Note: This section only appears when your Knowledge Agent uses source filtering for verified content and there are relevant unverified sources available. Users without verification permissions can view these sources but cannot add them to answers.

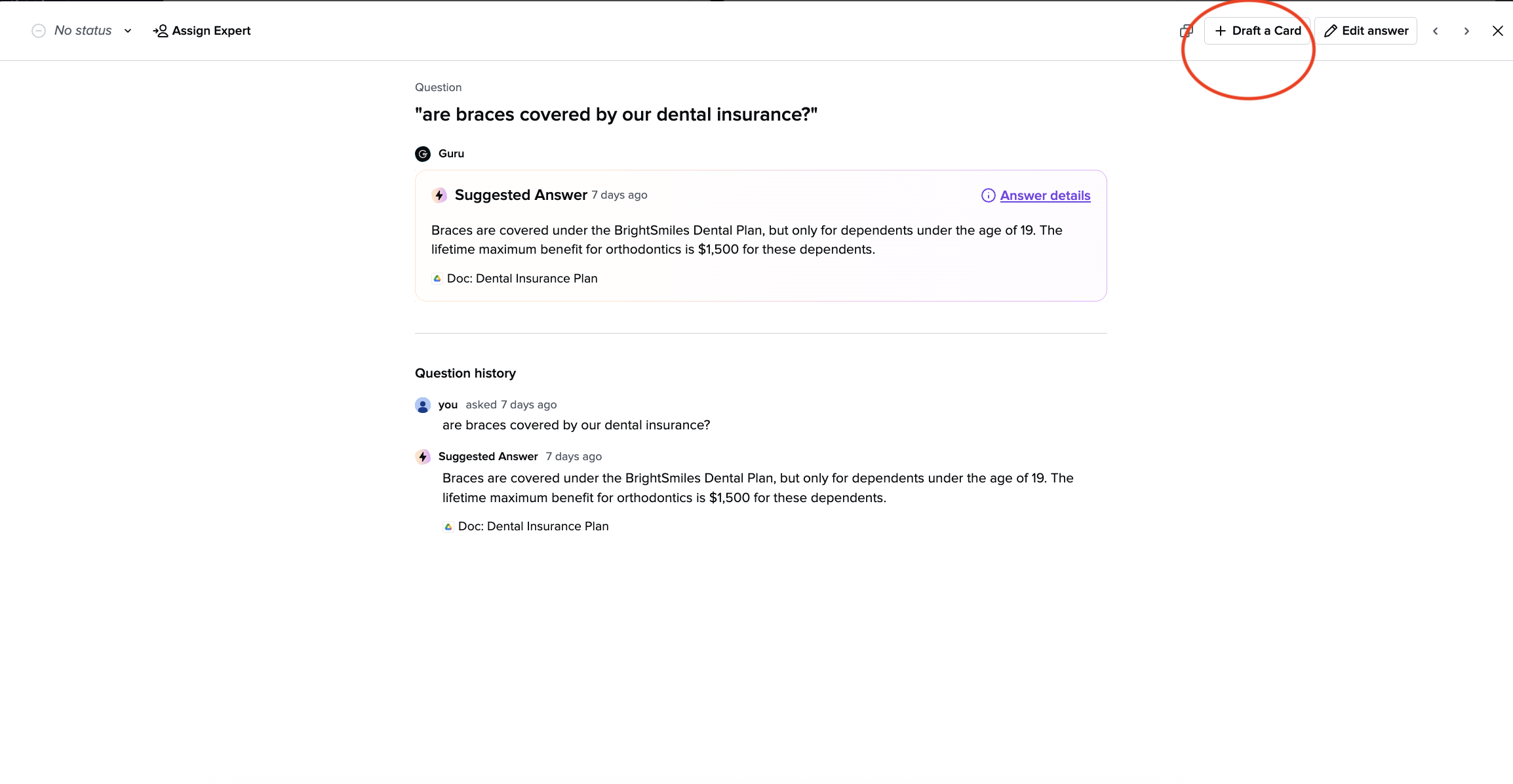

Creating a Card from an Answer

Draft Card from Answer allows you to quickly turn a high-quality answer from a Knowledge Agent into a draft-ready Guru Card. Only Collection Owners and relevant custom roles have access to this feature.

- Open a trusted Answer from the AI Agent Center

- Click Draft a Card

- The Card Editor will open in the same window, pre-filled with the question as the title and the Answer as the body.

- Edit the draft and save it just like you would with any new Card.

- Note: A Card created from an Answer is not tied to a specific Knowledge Agent or Collection. It’s a clean, standalone draft that the author can place wherever they have access.

Common use cases for the Questions tab

Investigating reported issues: When a user reports a bad answer, start here to see exactly what was asked and what your Knowledge Agent provided.

Training your Knowledge Agent: Look for questions with no feedback or low engagement to identify where your Knowledge Agent might need better sources or improved answers.

Understanding usage patterns: Review what questions are being asked most frequently to understand what knowledge gaps exist in your organization.

Quality assurance: Regularly review flagged answers to understand what's going wrong and whether sources need to be updated or removed.

The Sources Tab

The Sources tab shows you which content from your connected sources (Google Drive, Confluence, SharePoint, etc.) is being used to answer questions. This helps you understand what knowledge is most valuable and identify content that may need attention.

What you'll see

Each source listed includes:

- The source name and location

- Verification status (verified, unverified, or no status)

- How many times it's been used in answers

- Last used date

- Which Knowledge Agent(s) use this source

[Screenshot: Sources tab showing list of sources with verification badges]

Verification status indicators

Every source now displays a verification status with a visual indicator:

- ✓ Green checkmark (Verified): This source has been confirmed as accurate and current

- Gray indicator (Unverified): This source may be outdated or unreliable

- No badge (No verification status): This source hasn't been reviewed yet (default state)

Verification status helps you and your Knowledge Agent understand which content can be trusted. Depending on how your Knowledge Agent is configured, it may only use verified sources, or it may use verified sources plus sources with no verification status.
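The effect of this setting can be pictured as a simple allow-list over verification statuses. The sketch below is illustrative only: the `Status` enum, the setting keys, and `usable_sources` are hypothetical names, and the two settings mirror the "Verified only" and "Verified and no verification status" options described in this article.

```python
from enum import Enum


class Status(Enum):
    VERIFIED = "verified"
    UNVERIFIED = "unverified"
    NO_STATUS = "no_status"


# Hypothetical setting keys mapped to the statuses each one allows.
ALLOWED_STATUSES = {
    "verified_only": {Status.VERIFIED},
    "verified_and_no_status": {Status.VERIFIED, Status.NO_STATUS},
}


def usable_sources(sources, setting):
    """Return the names of sources the agent may use under the given setting.

    `sources` is a list of (name, Status) pairs.
    """
    allowed = ALLOWED_STATUSES[setting]
    return [name for name, status in sources if status in allowed]
```

Under either setting, explicitly unverified sources are left out of answers, which is why they are surfaced separately for review.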

Filtering sources

Use the filters to focus on specific types of content:

- By verification status (verified, unverified, no status)

- By source type (Google Drive, Confluence, SharePoint, etc.)

- By usage (most used, least used, recently used)

- By Knowledge Agent (if viewing multiple agents)

Common use cases for the Sources tab

Identifying high-value content: Sort by usage to see which documents are being used most frequently in answers. These are your most valuable knowledge assets.

Finding unverified content in use: Filter to "unverified" sources and sort by usage. If unverified content is being used heavily, it may need manual review or updating.

Understanding coverage: Review which connected sources are actually being used versus which are just connected. This can help you prioritize where to focus your content maintenance efforts.

Spot-checking verification: Regularly review newly verified or unverified sources to ensure automated verification is making good decisions.

The Quality Tab

The Quality tab gives you transparency into automated verification decisions made by your Knowledge Agent. This is where all the feedback you provide in the Questions tab and the usage patterns you see in the Sources tab come together into automated maintenance decisions.

What is automated verification?

Automated verification is a system that keeps content current without manual intervention. It runs daily, reviewing Cards and source documents that have been used to generate answers. Based on verification rules (behavioral, content-based, and analytical), your Knowledge Agent automatically verifies or unverifies content. Learn more about automated verification here.

Auto-verify is enabled by default for all Knowledge Agents (existing and new). Auto-unverify is disabled by default, so you can enable it when you're confident in your rules.

What you'll see: The Quality Log

The Quality Log provides a running record of all verification events, showing you:

- Which documents were verified or unverified by your Knowledge Agent

- When the change happened

- Why the decision was made, with clear reasoning based on your verification rules

- Confidence level (High/Medium/Low) indicating how certain the Knowledge Agent was in its verification decision

Understanding confidence levels

Each verification decision includes a confidence level that tells you how certain the Knowledge Agent was:

- High confidence: Strong, clear signals supported the decision (e.g., content thumbs-upped 10+ times with no flags)

- Medium confidence: Moderate signals supported the decision (e.g., content viewed regularly but limited feedback)

- Low confidence: Limited or ambiguous signals (e.g., content used only once or twice). Worth reviewing manually as the system did not have enough data.
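To make the three levels concrete, here is a minimal sketch that maps usage signals to a confidence label. Only two thresholds come from the examples above (10+ thumbs up with no flags, and content used once or twice). The function name, its parameters, and the rule that everything else is "Medium" are assumptions for illustration, not how Guru actually computes confidence.

```python
def confidence_level(thumbs_up: int, flags: int, times_used: int) -> str:
    """Illustrative mapping from feedback and usage signals to a confidence label."""
    # Strong, clear signals: many thumbs up and no flags (example from this article).
    if thumbs_up >= 10 and flags == 0:
        return "High"
    # Too little data: content used only once or twice (example from this article).
    if times_used <= 2:
        return "Low"
    # Assumption: anything in between counts as moderate signal.
    return "Medium"
```

A decision labeled "Low" by a rule like this is the kind worth reviewing manually, since there was little data behind it.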

Common use cases for the Quality tab

Weekly quality checks: Review the Quality Log once a week to spot any unexpected verification changes or low-confidence decisions that need attention.

Understanding automation impact: Track how many sources are being automatically maintained to demonstrate the value of automation to your organization.

Investigating verification issues: When a source unexpectedly becomes unverified, check the Quality Log to understand why and whether the decision was appropriate.

Refining verification rules: Review patterns in confidence levels and decisions to understand whether your rules need adjustment. If you're seeing many low-confidence decisions, your rules may need more specificity.

Evaluating AI Agent Center Analytics

The AI Agent Center transforms into a powerful intelligence hub when you use chat or research mode to conversationally query your AIAC dataset. Instead of manually reviewing individual questions and answers, you can ask natural language questions to uncover patterns, monitor issues, and assess automation ROI.

How it works

You can query your AIAC data through two modes:

Chat mode. Ask questions conversationally and receive the first 50 results per request for fast, focused insights.

Research mode. Toggle into deep research mode for more comprehensive exploration across multiple pages of results.
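The difference between the two modes can be sketched as "first page only" versus "walk every page". The 50-result limit for chat mode comes from this article. The function names and the research-mode page size are assumptions for illustration and do not describe Guru's implementation.

```python
CHAT_MODE_LIMIT = 50  # from the article: chat mode returns the first 50 results


def chat_mode(results):
    """Return only the first batch of results for a fast, focused answer."""
    return results[:CHAT_MODE_LIMIT]


def research_mode(results, page_size=50):
    """Yield successive pages so that every result is eventually covered.

    The page size here is an assumed value, not a documented one.
    """
    for start in range(0, len(results), page_size):
        yield results[start:start + page_size]
```

If a query matches more than 50 questions, a chat-mode answer reflects only the first 50, so research mode is the better fit for broad questions such as trend analysis.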

Filtering your data

The chat and research interface supports flexible filtering to help you analyze specific segments of your data:

- By Knowledge Agent

- By asker (person who asked the question)

- By time period

- By status (flagged, marked correct, not answered)

- By source used

Example use cases

Here are some ways teams use conversational querying to optimize their Knowledge Agents:

- Review all open or unanswered questions in the last 30 days

- Analyze flagged questions from the past week

- Identify top trending topics across the organization

- Surface questions containing sensitive or compliance-related terms

- Understand content usage by specific groups, such as questions asked by the engineering team

By surfacing actionable insights through conversational queries, you can continuously refine your Knowledge Agents, fill content gaps, and demonstrate the value of automation to your organization.

Frequently Asked Questions about the Agent Center

What’s the difference between Answers analytics and the expert dashboard?

- Answers Analytics (available to Admins, Knowledge Agent Owners, and relevant custom roles):

  - View feature adoption and usage by Group.

  - See all questions answered or not answered by Guru.

  - Export results to CSV.

  - Data includes question, asker, and date asked.

- AI Agent Center (accessible to Experts and Knowledge Agent Owners when assigned):

  - See full question, suggested answer, sources, feedback, and actions taken.

  - Limit: 90-day history.

  - Not exportable.

  - Used to update and train AI responses directly.

Will research appear in the AI Agent Center?

No, Research will not appear in the AI Agent Center. The individual who conducted the research can find it in the Chat window in the left-hand column.

What does it mean when I see a lock icon and "Answer Hidden. You don't have access to its sources"?

"Private source" means you don't have access to the source within Guru or the source has been deleted since the question was asked. If you have access to the source in Guru but you don't have access to it in its external system (or you aren't logged in to it), clicking the source's link will result in your access being denied.

How often does automated verification run?

The automated verification system runs daily, reviewing all Guru Content and external content that has been used in Answers. No content is reviewed more than once within a 7-day window to prevent unnecessary review.

Why don't I see all verification events in the Quality Log?

The Quality Log respects source permissions. If you don't have access to a particular document (for example, a Google Drive file you weren't shared on), you won't see verification events for that document.

What content is eligible for automated verification?

Only content that has been used in an Answer is eligible for review. This ensures verification focuses on content that actually matters to your organization.
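Taken together, the eligibility rules in this FAQ (content must have been used in an Answer, and nothing is reviewed more than once within a 7-day window) can be sketched as a single check. The function and parameter names are hypothetical, and treating content as eligible again exactly seven days after its last review is an assumption about the boundary.

```python
from datetime import date, timedelta
from typing import Optional

REVIEW_WINDOW = timedelta(days=7)  # from the article: at most one review per 7-day window


def eligible_for_review(used_in_answer: bool,
                        last_reviewed: Optional[date],
                        today: date) -> bool:
    """Illustrative check for whether the daily run would pick up this content."""
    # Only content that has been used in an Answer is eligible.
    if not used_in_answer:
        return False
    # Never reviewed before, so nothing blocks a first review.
    if last_reviewed is None:
        return True
    # Assumption: eligible again once a full 7 days have passed.
    return today - last_reviewed >= REVIEW_WINDOW
```

This is why a source that was reviewed a few days ago may not show a new Quality Log entry even though the system runs daily.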

Updated 18 days ago